Last December, I had the incredible opportunity to be part of something truly special. The NIH Office of Data Science Strategy (ODSS) and the National Cancer Institute (NCI) gathered 27 of us from wildly different fields for a five-day Innovation Lab. The goal? To answer a question that sounds like science fiction: How can quantum computing solve today’s most complex biomedical challenges?

The room buzzed with a vibrant mix of quantum physicists, computer scientists (from both quantum and classical computing), computational physicists, computational biologists, data scientists, and biomedical researchers. For five intense days, we were immersed in a whirlwind of collaboration, brainstorming, and problem-solving. The energy was electric as we worked to bridge the gaps between our disciplines and forge new paths for the future of medicine.

To catalyze our efforts, the NIH sponsored a challenge prize competition, dedicating $100,000 to the most promising projects developed during the lab. It wasn’t just about the funding; it was a powerful validation of the ideas born from this unique collaborative environment.

Our Journey: Team Quantum Heart

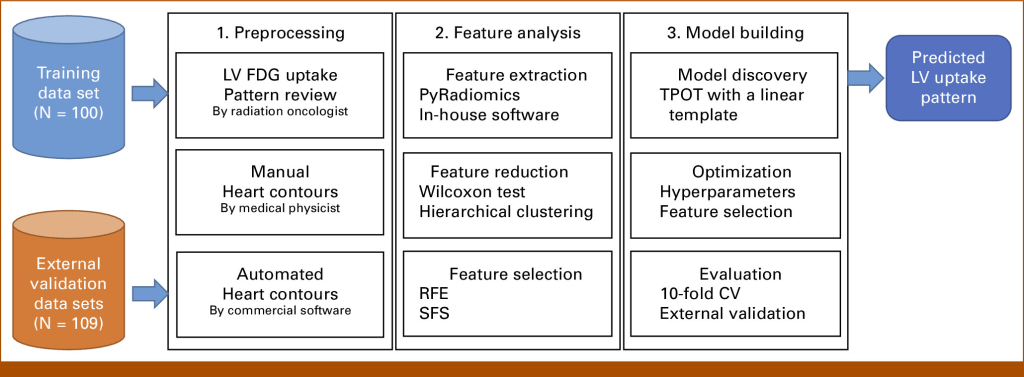

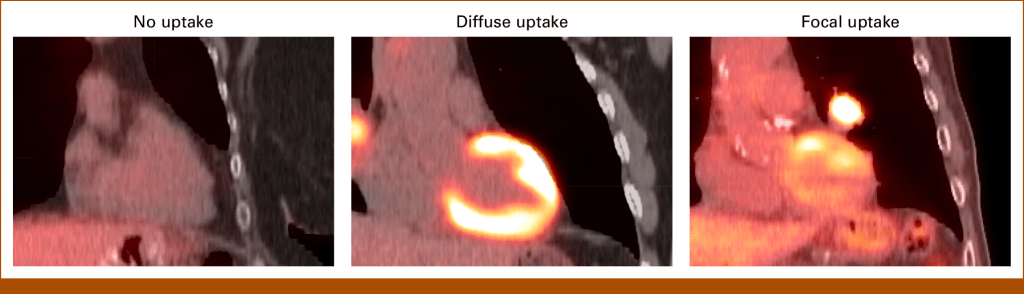

I am thrilled to announce that my team, Team Quantum Heart, was one of three teams to receive the top prize of $25,000. Our project seeks to transform the existing clinical decision-making framework by leveraging the unique strengths of quantum computing. It was a privilege to collaborate with my teammates:

- Iman Borazjani, PhD, from Texas A&M University (team leader)

- Wookjin Choi, PhD, from Sidney Kimmel Medical College at Thomas Jefferson University

- Jiaqi (Jimmy) Leng, PhD, from the University of California, Berkeley

- Zhenhua Jiang, PhD, from the University of Dayton Research Institute

Together, our combined expertise in medical physics, AI, fluid dynamics simulation, and quantum algorithms allowed us to develop a concept we believe can make a real-world impact on patient care. This prize is more than an award; it is the fuel that will help us propel our research forward.

The Road Ahead

Leaving the Innovation Lab, I felt a profound sense of optimism. The event was more than a competition; it marked the formation of a new community. The connections made and the ideas sparked over those five days have laid the groundwork for years of future research.

The convergence of quantum computing and biomedical science is no longer a distant dream. It is happening now, and I am honored to be a part of it. On behalf of Team Quantum Heart, thank you to the NIH for this incredible opportunity.